This interactive tutorial takes you through the steps of how to run the Thunderstorm Identification, Tracking, Analysis and Nowcasting (TITAN) suite. TITAN was originally designed as an algorithm to objectively identify and track thunderstorms from weather radar data for a weather modification experiment in South Africa in the 1980s. Now, Titan includes forecasting, storm analysis, and climatological analysis. TITAN now refers to the larger system in which the original application is one component.

Titan is described in more detail in Dixon and Wiener (1993).

Titan Background¶

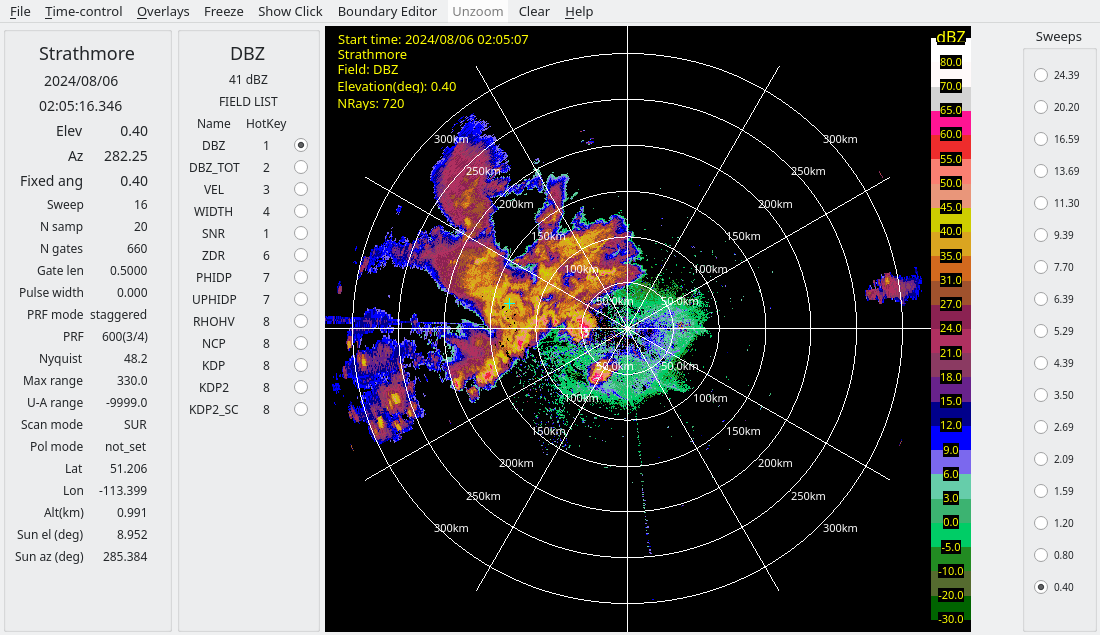

TITAN identifies storm objects as a contiguous region of echo that exceeds a user-defined reflectivity threshold and minimum volume. Dual thresholds are used to deal with storm objects that briefly touch, but do not merge. Storm tracking is performed by looking for regions of overlap between storm objects at successive time intervals. Short term storm extrapolation forecasts are used to identify instances of storm merging and splitting. TITAN output includes storm tracks, polygons outlining the storm objects, and storm property information (e.g., volume, area, mass, precipitation flux).

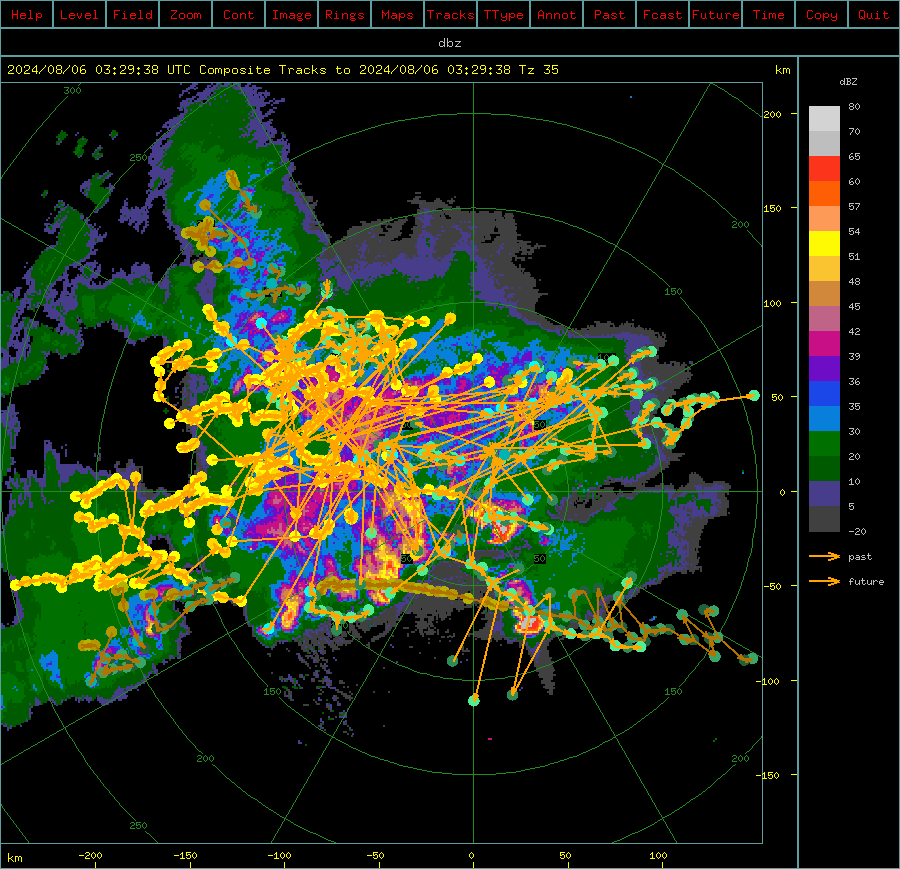

The high-level workflow for TITAN is shown in the graphic below. Key steps include quality controlling the data to remove any non-meteorological or compromised echoes and gridding the data to a Cartesian grid. Once TITAN is run and the tracks are produced, those data need to be converted into more user-friendly file types.

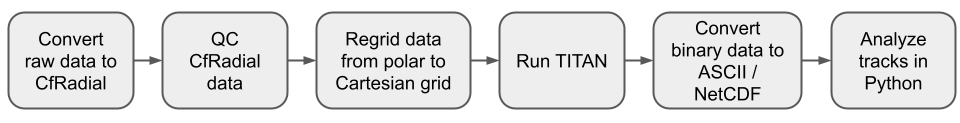

A more detailed workflow for TITAN that includes each step, application, and data type is shown in the graphic below.

Tutorial Overview¶

1. Setup¶

Download raw data and prepare parameter files¶

Raw data files that are provided:

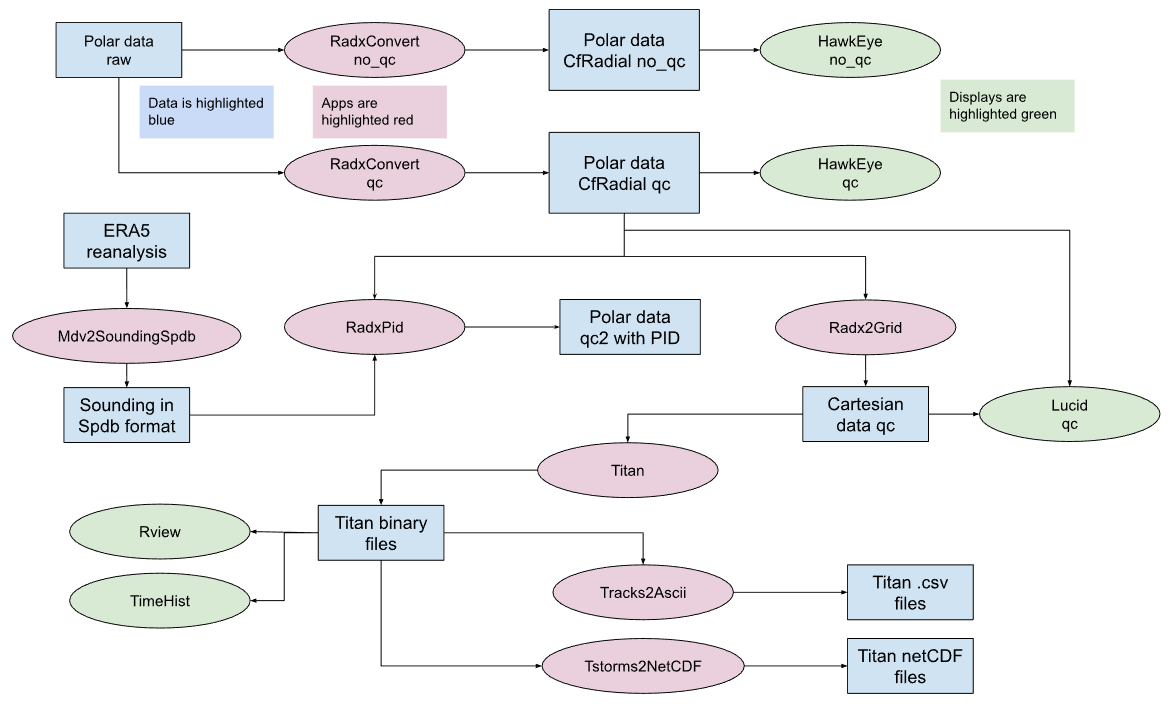

A hail storm in Alberta, observed by the Strathmore radar 40 km east Calgary.

A derecho event in Ontario, observed by the King City radar 40 km north of Toronto.

Both of these are 10 cm (S-band) Gematronik dual polarization radars.

The data (as a .tgz file) has been provided in the form of a zipped tar file, which we will unzip create the following tree:

./data/titan/ERA5/20220521

./data/titan/ERA5/20240806

./data/titan/radar/raw/hail/20240806*.h5

./data/titan/radar/raw/derecho/20220521*.h52. Output data¶

After the full analysis has been run, the following derived data directories should exist:

./data/titan/ERA5/spdb/Strathmore/20240806* (soundings from ERA5)

./data/titan/ERA5/spdb/KingCity/20220521* (soundings from ERA5)

./data/titan/radar/cfradial/qc/Strathmore/20240806/cfrad.20240806*nc (cfradial after QC)

./data/titan/radar/cfradial/qc/KingCity/20220521/cfrad.20220521*nc (cfradial after QC)

./data/titan/radar/cfradial/pid/Strathmore/20240806/cfrad.20240806*nc (cfradial PID)

./data/titan/radar/cfradial/pid/Strathmore/20240806/cfrad.20240806*nc (cfradial PID)

./data/titan/radar/cart/qc/Strathmore/20240806/ncf_20240806*nc (Cartesian MDC CF-compliant netcdf)

./data/titan/radar/cart/qc/KingCity/20220521/ncf_202205216*nc (Cartesian MDC CF-compliant netcdf)

./data/titan/titan/storms/Strathmore/20240806* (Titan binary files)

./data/titan/titan/storms/KingCity/20220521* (Titan binary files)

./data/titan/titan/ascii/Tracks2Ascii.hail.txt (Titan output converted by Tracks2Ascii)

./data/titan/titan/ascii/Tracks2Ascii.derecho.txt (Titan output converted by Tracks2Ascii)

./data/titan/titan/netcdf/Strathmore/titan_20240806.nc (Titan output converted by Tstorms2NetCDF)

./data/titan/titan/netcdf/KingCity/titan_20220521.nc (Titan output converted by Tstorms2NetCDF)3. Note on task cells¶

This notebook uses two colored cells to indicate tasks.

These text blocks help the user modify the parameter files or other functions in external text files.

These text blocks instruct the users to run a command in a cell within the Jupyter notebook. If you prefer, you are welcome to copy the commands (minus the ! symbol) into a terminal window.

First, we import the required python packages to run this notebook. Most of the LROSE processing can be done with the os package and shell commands.

import os

import subprocess

import warnings

warnings.filterwarnings("ignore")

import os

import fsspec

import glob

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.ticker as plticker

from matplotlib.lines import Line2D

import cartopy.crs as ccrs

import cartopy.io.shapereader as shpreader

import cartopy.geodesic as cgds

from cartopy.mpl.gridliner import LONGITUDE_FORMATTER, LATITUDE_FORMATTER

from cartopy import feature as cfeature

import xarray as xr

import pyart

## You are using the Python ARM Radar Toolkit (Py-ART), an open source

## library for working with weather radar data. Py-ART is partly

## supported by the U.S. Department of Energy as part of the Atmospheric

## Radiation Measurement (ARM) Climate Research Facility, an Office of

## Science user facility.

##

## If you use this software to prepare a publication, please cite:

##

## JJ Helmus and SM Collis, JORS 2016, doi: 10.5334/jors.119

1.1 Set up directories¶

We need to set up the required data directories. The raw radar data will be grabbed from the S3 bucket. We delete any existing files and directories specific to this tutorial to ensure we’re starting with clean directories and files.

# make overall titan directory and application output directory

!mkdir -p ./data/titan/titan

# make directory for output ascii files from TITAN

!mkdir -p ./data/titan/titan/ascii1.2 Set up the environment¶

First, we’ll set some key variables we’ll need throughout the workflow.

# Set directory variable to call LROSE

os.environ["LROSE_DIR"] = "/usr/local/lrose/bin"

os.environ["DATA_DIR"] = "./"1.3 Get data¶

Because some of the preprocessing requires ancillary data, we need to grab and untar that data.

import os

import fsspec

import shutil

# Remote endpoint and path

URL = "https://js2.jetstream-cloud.org:8001/"

path = "pythia/radar/ams2025"

# Initialize filesystem

fs = fsspec.filesystem("s3", anon=True, client_kwargs=dict(endpoint_url=URL))

# Get list of files (example: Ontario Derecho)

files = fs.glob(f"{path}/OntarioDerecho2022/2022052115*.h5")

# Local base directory where you want to store files

local_base = "./radar/raw/derecho"

for remote_file in files:

# Construct local file path, preserving the relative directory structure

rel_path = os.path.relpath(remote_file, start=path)

local_file = os.path.join(local_base, rel_path)

# Ensure parent directories exist

os.makedirs(os.path.dirname(local_file), exist_ok=True)

# Open remote and local files and copy

with fs.open(remote_file, "rb") as fsrc, open(local_file, "wb") as fdst:

shutil.copyfileobj(fsrc, fdst)

print(f"Downloaded {remote_file} -> {local_file}")

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_00_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_00_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_00_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_00_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_06_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_06_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_06_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_06_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_12_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_12_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_12_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_12_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_18_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_18_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_18_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_18_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_24_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_24_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_24_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_24_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_30_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_30_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_30_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_30_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_36_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_36_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_36_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_36_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_42_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_42_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_42_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_42_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_48_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_48_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_48_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_48_ODIMH5_PVOL6S_VOL_CASKR.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_54_ODIMH5_PVOL6S_VOL_CASET.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_54_ODIMH5_PVOL6S_VOL_CASET.h5

Downloaded pythia/radar/ams2025/OntarioDerecho2022/2022052115_54_ODIMH5_PVOL6S_VOL_CASKR.h5 -> ./radar/raw/derecho/OntarioDerecho2022/2022052115_54_ODIMH5_PVOL6S_VOL_CASKR.h5

import os

import fsspec

import shutil

# Remote endpoint and path

URL = "https://js2.jetstream-cloud.org:8001/"

path = "pythia/radar/ams2025/lrose/ams2025/ERA5"

# Initialize filesystem

fs = fsspec.filesystem("s3", anon=True, client_kwargs=dict(endpoint_url=URL))

# Get list of files (example: Ontario Derecho)

files = fs.glob(f"{path}/20220521/20220521_15*")

# Local base directory where you want to store files

local_base = "./ERA5"

for remote_file in files:

# Construct local file path, preserving the relative directory structure

rel_path = os.path.relpath(remote_file, start=path)

local_file = os.path.join(local_base, rel_path)

# Ensure parent directories exist

os.makedirs(os.path.dirname(local_file), exist_ok=True)

# Open remote and local files and copy

with fs.open(remote_file, "rb") as fsrc, open(local_file, "wb") as fdst:

shutil.copyfileobj(fsrc, fdst)

print(f"Downloaded {remote_file} -> {local_file}")

Downloaded pythia/radar/ams2025/lrose/ams2025/ERA5/20220521/20220521_150000.mdv.cf.nc -> ./ERA5/20220521/20220521_150000.mdv.cf.nc

2. Prepare data for analysis¶

The following sections describe two quality control setups and how to run the scripts.

2.1: Option 1 - Apply quality control (QC) on the raw radar data and convert to CfRadial format using RadxConvert¶

In the hail case, there is no significant signal interference.

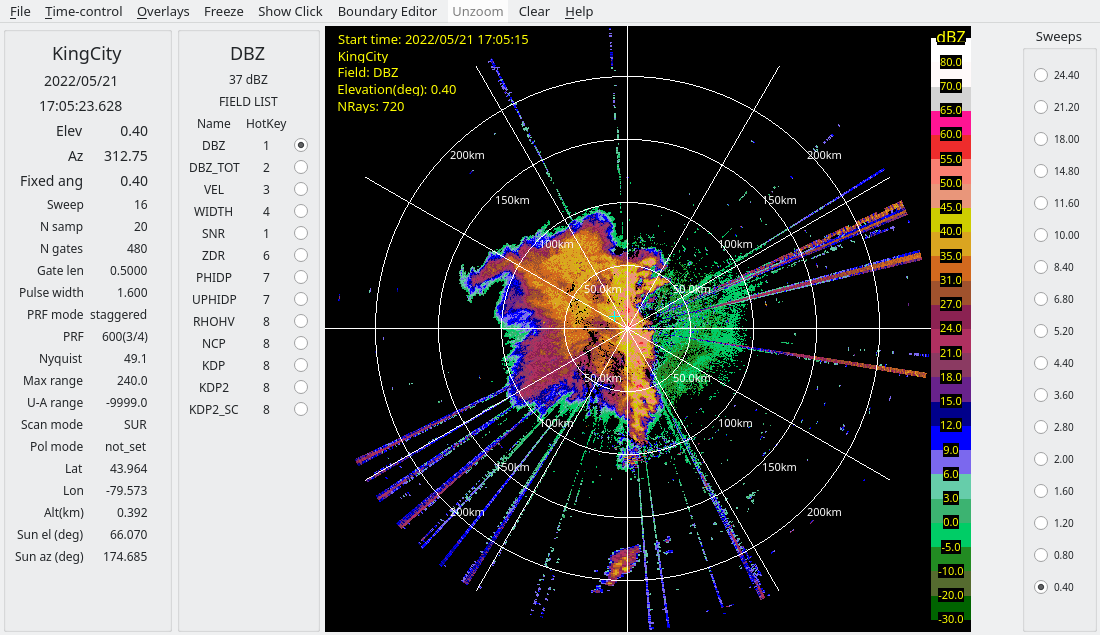

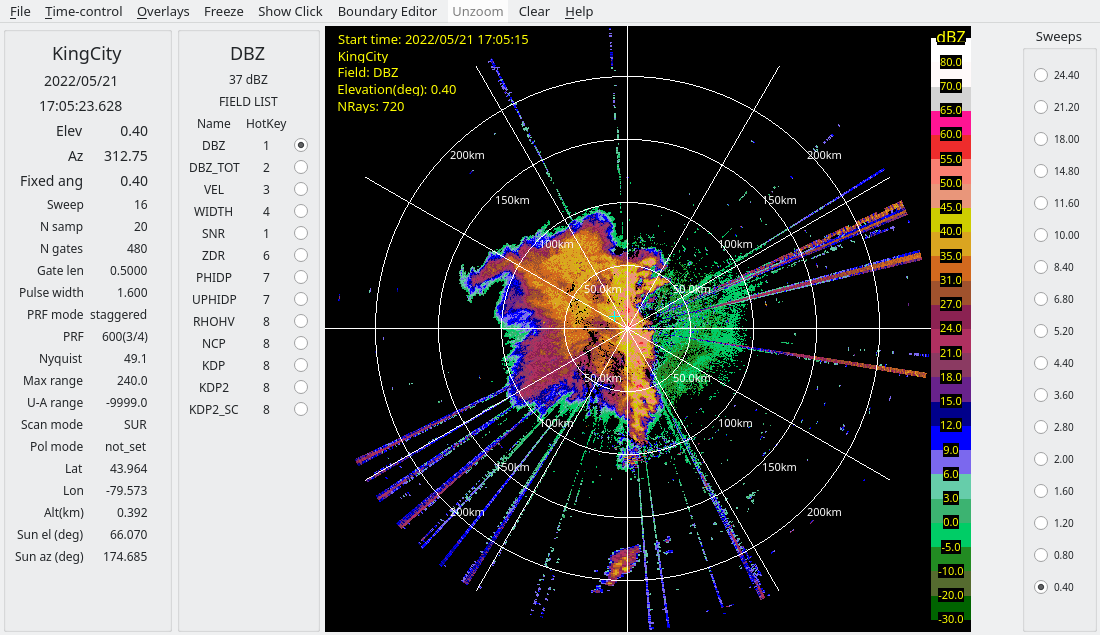

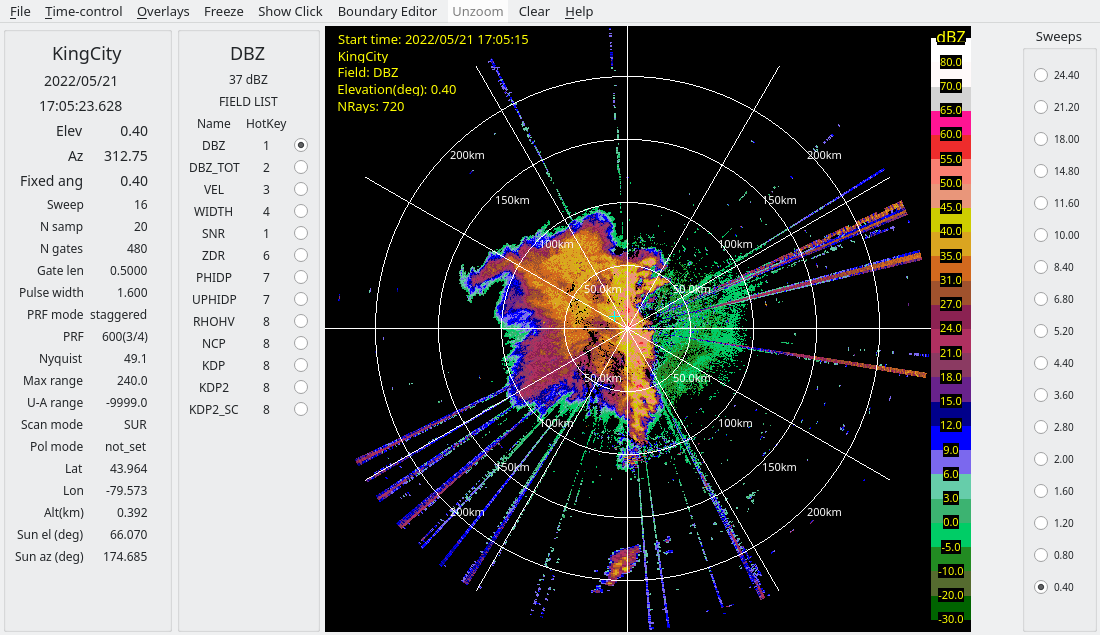

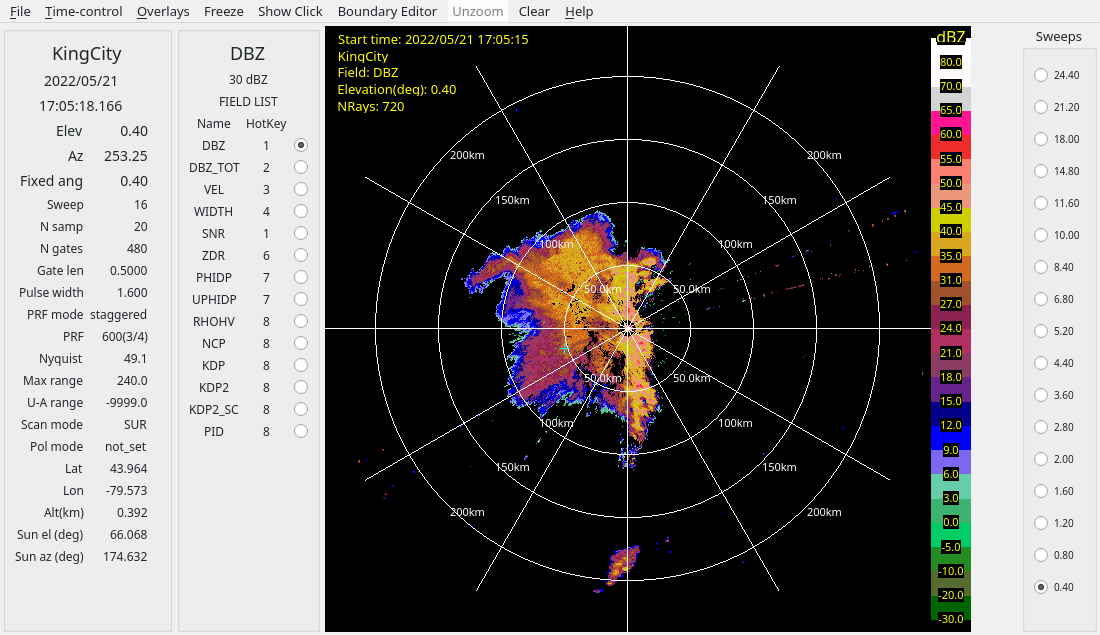

In the derecho case, considerable interference is present, appearing as radial spikes.

Closer inspection of these spikes shows that the interference sources are not coherent with the radars, as indicated by:

Low SQI (NCP)

Moderately low SNR

To address this, we use RadxConvert to censor data fields based on thresholds applied to the input fields. Specifically, data are removed at gates where both conditions are met:

SQI (NCP) < 0.2

SNR < 25 dB

Since later QC steps require signal-to-noise ratio (SNR), the SNR field is derived from reflectivity (DBZ) and added during processing.

Finally, the raw HDF5 files are converted to CfRadial format using RadxConvert with this simple quality control applied.

Run the derecho case QC script:

!$LROSE_DIR/RadxConvert -sort_rays_by_time -const_ngates -params ./params/titan/RadxConvert.qc.derecho -debug -f ${DATA_DIR}/radar/raw/derecho/202205211*CASKR.h5# Run QC on derecho case data

!$LROSE_DIR/RadxConvert -sort_rays_by_time -const_ngates -params ./params/titan/RadxConvert.qc.derecho -f ./radar/raw/derecho/OntarioDerecho2022/202205211*CASKR.h5======================================================================

Program 'RadxConvert'

Run-time 2025/08/24 18:47:57.

Copyright (c) 1992 - 2025

University Corporation for Atmospheric Research (UCAR)

National Center for Atmospheric Research (NCAR)

Boulder, Colorado, USA.

Redistribution and use in source and binary forms, with

or without modification, are permitted provided that the following

conditions are met:

1) Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

2) Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

3) Neither the name of UCAR, NCAR nor the names of its contributors, if

any, may be used to endorse or promote products derived from this

software without specific prior written permission.

4) If the software is modified to produce derivative works, such modified

software should be clearly marked, so as not to confuse it with the

version available from UCAR.

======================================================================

This simple QC removes some of the bad data, as shown by the screenshots below from the derecho case.

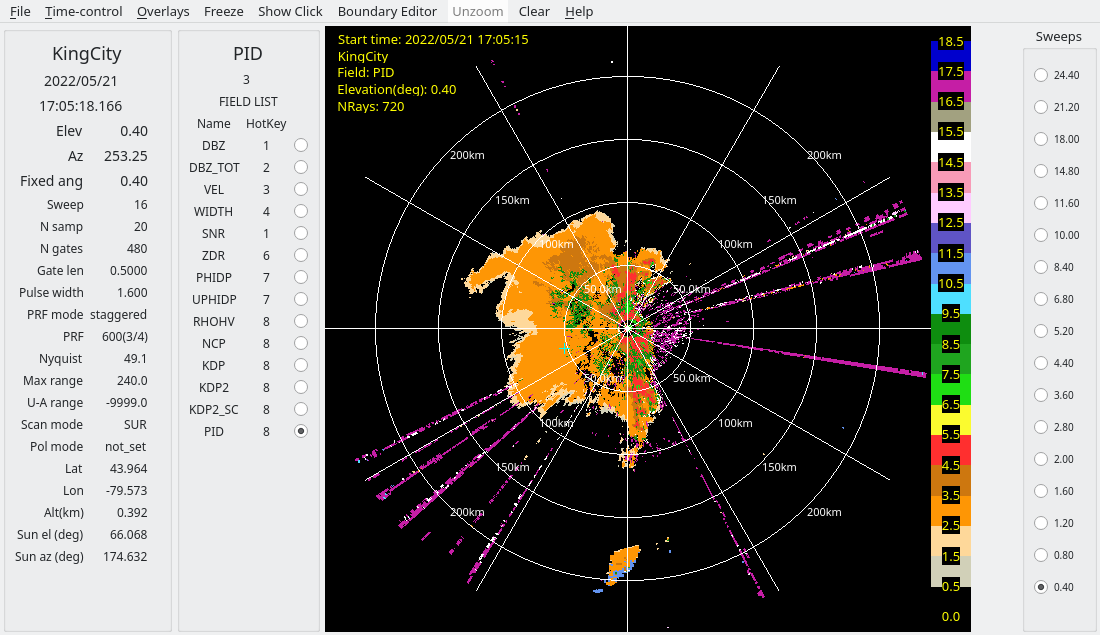

2.2: Option 2 - Computing PID as an alternative method of censoring using RadxPid¶

An alternative method for cleaning up interference is to run RadxPid, and censor non-meteorological echoes.

First, we have to download the ERA5 reanalysis for these cases, and we can use that to save the model-based soundings:

Run the ERA5 sounding scripts:

!$LROSE_DIR/Mdv2SoundingSpdb -debug -params ./params/titan/Mdv2SoundingSpdb.ERA5.derecho -f $DATA_DIR/ERA5/20220521/20220521_*# Get the ERA5 sounding for derecho case data

!$LROSE_DIR/Mdv2SoundingSpdb -debug -params ./params/titan/Mdv2SoundingSpdb.ERA5.derecho -f ./ERA5/20220521/20220521_*======================================================================

Program 'Mdv2SoundingSpdb'

Run-time 2025/08/24 18:48:30.

Copyright (c) 1992 - 2025

University Corporation for Atmospheric Research (UCAR)

National Center for Atmospheric Research (NCAR)

Boulder, Colorado, USA.

Redistribution and use in source and binary forms, with

or without modification, are permitted provided that the following

conditions are met:

1) Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

2) Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

3) Neither the name of UCAR, NCAR nor the names of its contributors, if

any, may be used to endorse or promote products derived from this

software without specific prior written permission.

4) If the software is modified to produce derivative works, such modified

software should be clearly marked, so as not to confuse it with the

version available from UCAR.

======================================================================

Processing file: ./ERA5/20220521/20220521_150000.mdv.cf.nc

Mdvx::readVsection - reading file: ./ERA5/20220521/20220521_150000.mdv.cf.nc

SUCCESS - opened file: ./ERA5/20220521/20220521_150000.mdv.cf.nc

SUCCESS - setting master header

Default time dimension: time

time: 2022/05/21 15:00:00

SUCCESS - setting time coord variable

Ncf2MdvTrans::_shouldAddField

-->> rejecting field: time_bounds

Ncf2MdvTrans::_shouldAddField

-->> rejecting field: time_bounds

Ncf2MdvTrans::_shouldAddField

-->> rejecting field: DIV

Ncf2MdvTrans::_shouldAddField

-->> rejecting field: DIV

Ncf2MdvTrans::_shouldAddField

-->> rejecting field: SH

Ncf2MdvTrans::_shouldAddField

-->> rejecting field: SH

Ncf2MdvTrans::_shouldAddField

-->> adding field: RH

Ncf2MdvTrans::_shouldAddField

Checking variable for field data: RH

SUCCESS - var has X coordinate, dim: x0

SUCCESS - var has Y coordinate, dim: y0

NOTE - var has Z coordinate, dim: z0

Ncf2MdvTrans::_addOneField

-->> adding field: RH

Adding data field: RH

time: 2022/05/21 15:00:00

Ncf2MdvTrans::_shouldAddField

-->> adding field: TEMP

Ncf2MdvTrans::_shouldAddField

Checking variable for field data: TEMP

SUCCESS - var has X coordinate, dim: x0

SUCCESS - var has Y coordinate, dim: y0

NOTE - var has Z coordinate, dim: z0

Ncf2MdvTrans::_addOneField

-->> adding field: TEMP

Adding data field: TEMP

time: 2022/05/21 15:00:00

Ncf2MdvTrans::_shouldAddField

-->> adding field: U

Ncf2MdvTrans::_shouldAddField

Checking variable for field data: U

SUCCESS - var has X coordinate, dim: x0

SUCCESS - var has Y coordinate, dim: y0

NOTE - var has Z coordinate, dim: z0

Ncf2MdvTrans::_addOneField

-->> adding field: U

Adding data field: U

time: 2022/05/21 15:00:00

Ncf2MdvTrans::_shouldAddField

-->> adding field: V

Ncf2MdvTrans::_shouldAddField

Checking variable for field data: V

SUCCESS - var has X coordinate, dim: x0

SUCCESS - var has Y coordinate, dim: y0

NOTE - var has Z coordinate, dim: z0

Ncf2MdvTrans::_addOneField

-->> adding field: V

Adding data field: V

time: 2022/05/21 15:00:00

Ncf2MdvTrans::_shouldAddField

-->> adding field: W

Ncf2MdvTrans::_shouldAddField

Checking variable for field data: W

SUCCESS - var has X coordinate, dim: x0

SUCCESS - var has Y coordinate, dim: y0

NOTE - var has Z coordinate, dim: z0

Ncf2MdvTrans::_addOneField

-->> adding field: W

Adding data field: W

time: 2022/05/21 15:00:00

Ncf2MdvTrans::_shouldAddField

-->> rejecting field: Z

Ncf2MdvTrans::_shouldAddField

-->> rejecting field: Z

Ncf2MdvTrans::_shouldAddField

-->> adding field: height

Ncf2MdvTrans::_shouldAddField

Checking variable for field data: height

SUCCESS - var has X coordinate, dim: x0

SUCCESS - var has Y coordinate, dim: y0

NOTE - var has Z coordinate, dim: z0

Ncf2MdvTrans::_addOneField

-->> adding field: height

Adding data field: height

time: 2022/05/21 15:00:00

Ncf2MdvTrans::_shouldAddField

-->> adding field: pressure

Ncf2MdvTrans::_shouldAddField

Checking variable for field data: pressure

SUCCESS - var has X coordinate, dim: x0

SUCCESS - var has Y coordinate, dim: y0

NOTE - var has Z coordinate, dim: z0

Ncf2MdvTrans::_addOneField

-->> adding field: pressure

Adding data field: pressure

time: 2022/05/21 15:00:00

Ncf2MdvTrans::addDataFieldsTime elapsed = 0

Ncf2MdvTrans::addGlobalAttrXmlChunk()

Wrote spdb data, URL: .//ERA5/spdb/KingCity

Station name : KING

Sounding time: 2022/05/21 15:00:00

And we can then run RadxPid:

Run the derecho case PID script:

!$LROSE_DIR/RadxPid -params ./params/titan/RadxPid.derecho -debug# Run PID on derecho case data

!$LROSE_DIR/RadxPid -params ./params/titan/RadxPid.derecho -debugCannot stat file for parsing

pid_thresholds.sband.shv: No such file or directory

ERROR: RadxPid

Cannot read params file for NcarPid: pid_thresholds.sband.shv

Error: Could not create RadxPid object.

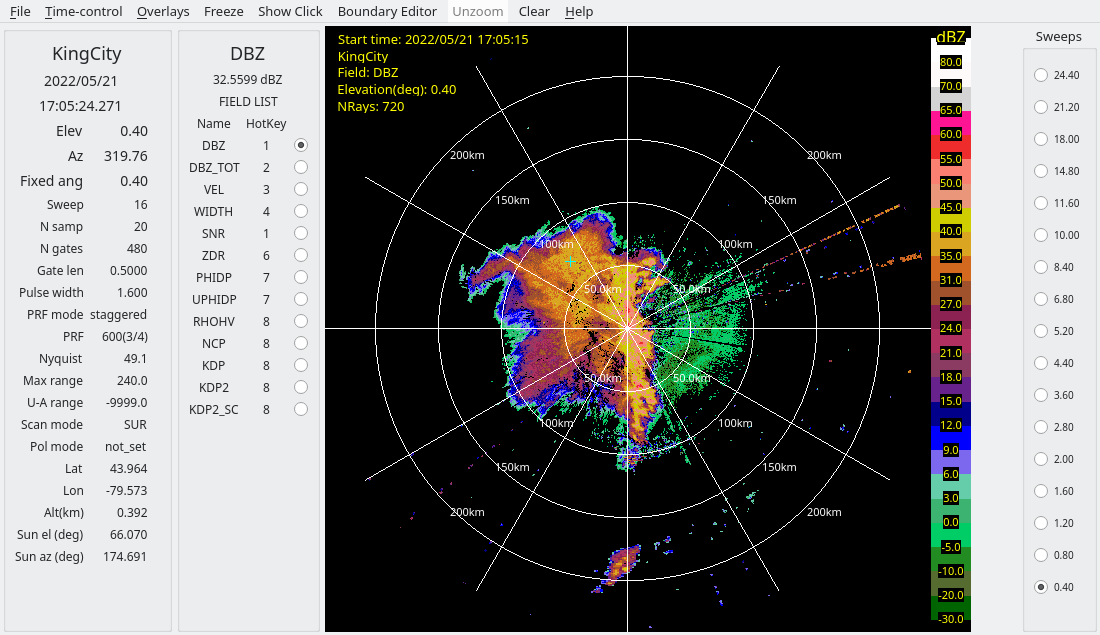

The following shows the PID field for the derecho case:

The interference is identified as clutter in this case.

And the following shows the raw data and after using PID to clean up the reflectivity field:

For this tutorial we will use the QC data created by RadxConvert.

2.3: Convert to Cartesian grid using Radx2Grid¶

Titan requires input data in Cartesian coordinates, rather than polar. To perform this transformation, we run the following to convert the data to Cartesian grid format.

Run the derecho case grid conversion script:

!$LROSE_DIR/Radx2Grid -params ./params/titan/Radx2Grid.derecho -debug# Convert derecho case to Cartesian grid

!$LROSE_DIR/Radx2Grid -params ./params/titan/Radx2Grid.derecho======================================================================

Program 'Radx2Grid'

Run-time 2025/08/24 18:48:31.

Copyright (c) 1992 - 2025

University Corporation for Atmospheric Research (UCAR)

National Center for Atmospheric Research (NCAR)

Boulder, Colorado, USA.

Redistribution and use in source and binary forms, with

or without modification, are permitted provided that the following

conditions are met:

1) Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

2) Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

3) Neither the name of UCAR, NCAR nor the names of its contributors, if

any, may be used to endorse or promote products derived from this

software without specific prior written permission.

4) If the software is modified to produce derivative works, such modified

software should be clearly marked, so as not to confuse it with the

version available from UCAR.

======================================================================

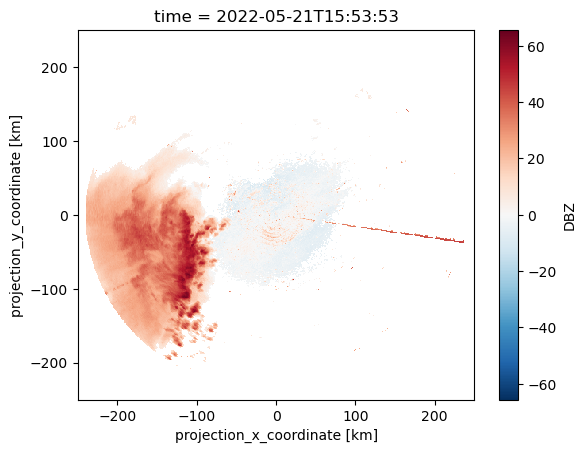

3.1 Run Tobac¶

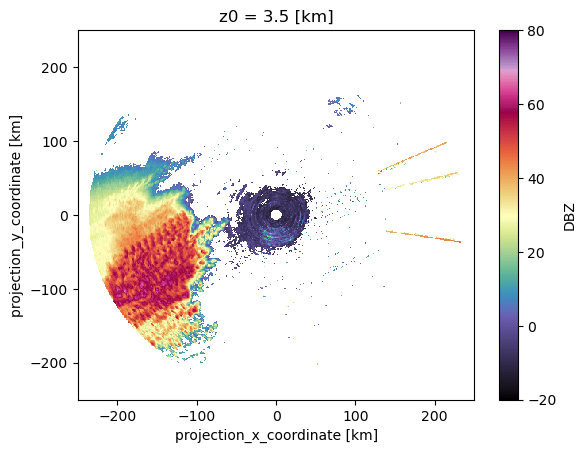

ds = xr.open_mfdataset(glob.glob("./radar/cart/qc/KingCity/20220521/*"))dsrefl_max = ds.DBZ.max(dim=["z0"])

refl_max.isel(time=-1).plot()

import tobac

#Defining the resolution of our data

dxy = 1_000

dt = 5*60

# Keyword arguments for feature detection step:

parameters_features={}

parameters_features['position_threshold']='weighted_diff'

parameters_features['sigma_threshold']=0.5

parameters_features['min_distance']=0

parameters_features['sigma_threshold']=1

parameters_features['threshold']=[30, 40, 50] #m/s

parameters_features['n_erosion_threshold']=0

parameters_features['n_min_threshold']=3

# Perform feature detection and save results:

print('start feature detection based on maximum vertical velocity')

Features=tobac.feature_detection_multithreshold(refl_max,dxy, vertical_cord='z0', **parameters_features)

print('feature detection performed start saving features')

#Features.to_hdf(savedir / 'Features.h5', 'table')

print('features saved')

Features# Keyword arguments for linking step:

parameters_linking={}

parameters_linking['method_linking']='predict'

parameters_linking['adaptive_stop']=0.5

parameters_linking['adaptive_step']=0.95

parameters_linking['extrapolate']=0

parameters_linking['order']=1

parameters_linking['subnetwork_size']=5

parameters_linking['memory']=0

parameters_linking['time_cell_min']=dt

parameters_linking['method_linking']='predict'

parameters_linking['v_max']=15

# Perform linking and save results:

Track=tobac.linking_trackpy(Features,refl_max,dt=dt,dxy=dxy,**parameters_linking)

#Track.to_hdf(savedir / 'Track.h5', 'table')

TrackTrack = Track[Track["cell"] != -1]

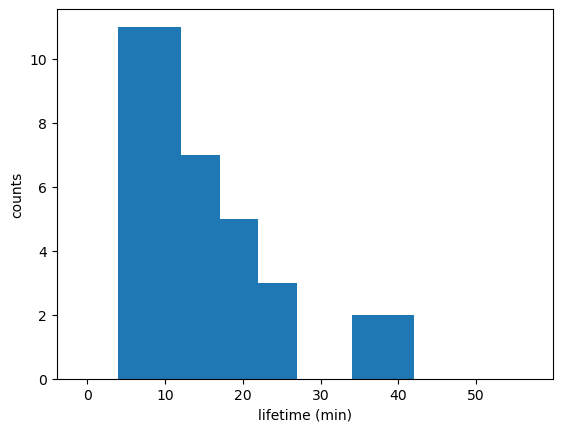

# Updraft lifetimes of tracked cells:

fig_lifetime,ax_lifetime=plt.subplots()

tobac.plot_lifetime_histogram_bar(Track,axes=ax_lifetime,bin_edges=np.arange(0,60,5),density=False,width_bar=8)

ax_lifetime.set_xlabel('lifetime (min)')

ax_lifetime.set_ylabel('counts')

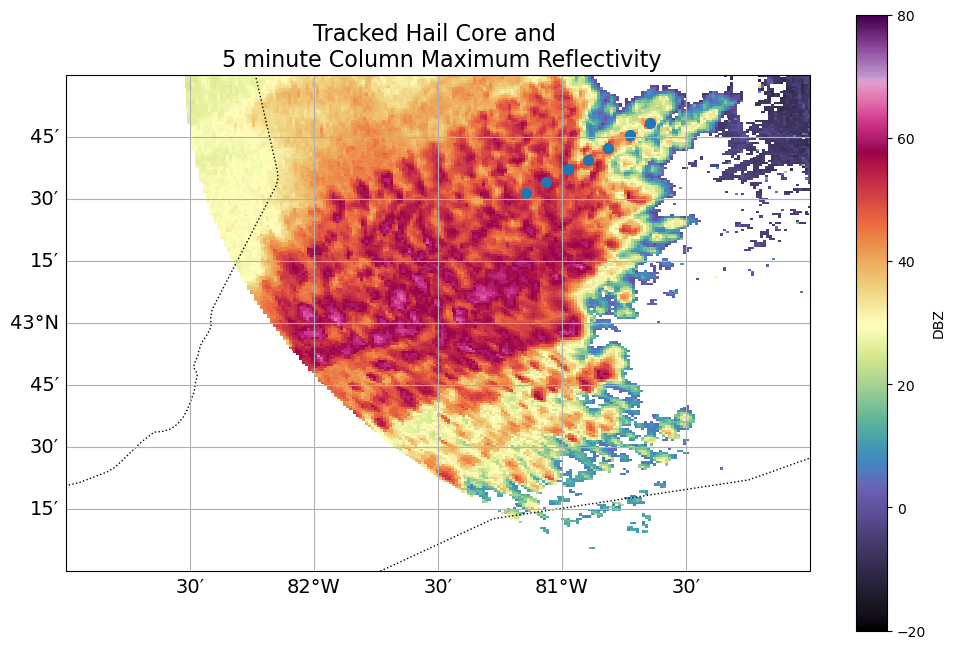

Track[Track.time_cell == Track.time_cell.max()]Track[Track.cell == 43]ds.DBZ.sel(z0=3, method='nearest').max(dim=["time"]).plot(vmin=-20,

vmax=80,

cmap='ChaseSpectral')

ds.sel(time = ds.time[::2])fig = plt.figure(figsize=(12,8))

ax = plt.axes(projection=ccrs.PlateCarree())

ax.set_extent([-83, -80, 42, 44], crs=ccrs.PlateCarree())

ax.coastlines(resolution='10m')

ax.add_feature(cfeature.BORDERS, linestyle=':')

gl = ax.gridlines(draw_labels=True, dms=True, x_inline=False, y_inline=False)

gl.top_labels = False

gl.right_labels = False

gl.xlabel_style = {'size': 14}

gl.ylabel_style = {'size': 14}

ds.DBZ.sel(z0=3,

method='nearest').max(dim=["time"]).plot(x='lon0',

y='lat0',

vmin=-20,

vmax=80,

cmap='ChaseSpectral',

ax=ax)

Track[Track.cell == 43].plot.scatter(x='lon0',

y='lat0',

ax=ax,

s=50)

plt.title("Tracked Hail Core and \n 5 minute Column Maximum Reflectivity", fontsize=16);

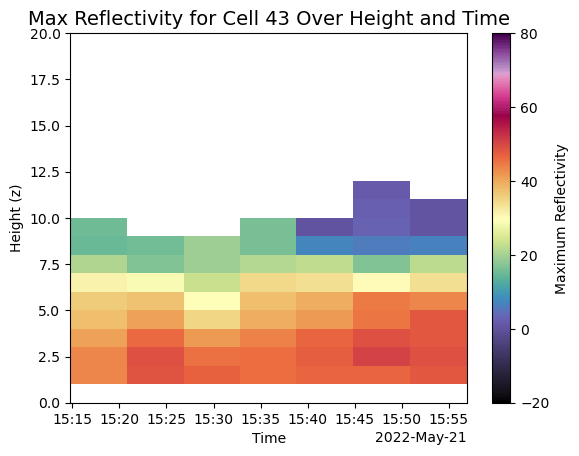

def subset_column(ds, time, x, y):

return ds.sel(time=time, x0=x, y0=y, method='nearest')cell_number = 43

# Filter for a single cell (cell 70)

single_cell = Track[Track.cell == cell_number]

# Subset data at each time step using x, y, and time

ds_list = []

for time in single_cell.time.values:

row = single_cell.loc[single_cell.time == time]

ds_list.append(subset_column(ds, time=row.time.values, x=row.x0.values, y=row.y0.values))

# Concatenate all time slices into a single dataset

single_cell_ds = xr.concat(ds_list, dim='time')

# Plot the max vertical wind component over time and height

plot = single_cell_ds.DBZ.max(["y0", "x0"]).plot(

y='z0',

vmin=-20,

vmax=80,

cmap='ChaseSpectral',

cbar_kwargs={'label': 'Maximum Reflectivity'} # custom colorbar label

)

# Add a custom title and axis labels

plt.title(f"Max Reflectivity for Cell {cell_number} Over Height and Time", fontsize=14)

plt.xlabel("Time")

plt.ylabel("Height (z)")

# Show plot

plt.show()

3. Run TITAN storm tracking¶

Run the TITAN algorithm to identify and track storms.

Titan runs on the Cartesian gridded data, using the DBZ field and optionally the VEL field to compute storm rotation.

Run the derecho case TITAN script:

!$LROSE_DIR/Titan -params ./params/titan/Titan.derecho -start "2022 05 21 12 00 00" -end "2022 05 21 20 00 00" -debug# Run TITAN on derecho case data

!$LROSE_DIR/Titan -params ./params/titan/Titan.derecho -start "2022 05 21 12 00 00" -end "2022 05 21 20 00 00"======================================================================

Program 'Titan'

Run-time 2025/08/24 16:32:56.

Copyright (c) 1992 - 2025

University Corporation for Atmospheric Research (UCAR)

National Center for Atmospheric Research (NCAR)

Boulder, Colorado, USA.

Redistribution and use in source and binary forms, with

or without modification, are permitted provided that the following

conditions are met:

1) Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

2) Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

3) Neither the name of UCAR, NCAR nor the names of its contributors, if

any, may be used to endorse or promote products derived from this

software without specific prior written permission.

4) If the software is modified to produce derivative works, such modified

software should be clearly marked, so as not to confuse it with the

version available from UCAR.

======================================================================

4. Convert TITAN binary output to readable format¶

4.1 Convert TITAN binary output to ASCII format for analysis.¶

Run the derecho case ASCII conversion script:

!$LROSE_DIR/Titan -params ./params/titan/Titan.derecho -start "2022 05 21 12 00 00" -end "2022 05 21 20 00 00" -debug!$LROSE_DIR/Tracks2Ascii -params ./params/titan/Tracks2Ascii.derecho -f ./titan/storms/KingCity/20220521.th5 > ./data/titan/titan/ascii/Tracks2Ascii.derecho.txt======================================================================

Program 'Tracks2Ascii'

Run-time 2025/08/24 16:33:00.

Copyright (c) 1992 - 2025

University Corporation for Atmospheric Research (UCAR)

National Center for Atmospheric Research (NCAR)

Boulder, Colorado, USA.

Redistribution and use in source and binary forms, with

or without modification, are permitted provided that the following

conditions are met:

1) Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

2) Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

3) Neither the name of UCAR, NCAR nor the names of its contributors, if

any, may be used to endorse or promote products derived from this

software without specific prior written permission.

4) If the software is modified to produce derivative works, such modified

software should be clearly marked, so as not to confuse it with the

version available from UCAR.

======================================================================

Processing track file ./titan/storms/KingCity/20220521.th5

# Convert derecho case TITAN output to ASCII

!$LROSE_DIR/Titan -params ./params/titan/Titan.derecho -start "2022 05 21 12 00 00" -end "2022 05 21 20 00 00"======================================================================

Program 'Titan'

Run-time 2025/08/24 16:33:01.

Copyright (c) 1992 - 2025

University Corporation for Atmospheric Research (UCAR)

National Center for Atmospheric Research (NCAR)

Boulder, Colorado, USA.

Redistribution and use in source and binary forms, with

or without modification, are permitted provided that the following

conditions are met:

1) Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

2) Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

3) Neither the name of UCAR, NCAR nor the names of its contributors, if

any, may be used to endorse or promote products derived from this

software without specific prior written permission.

4) If the software is modified to produce derivative works, such modified

software should be clearly marked, so as not to confuse it with the

version available from UCAR.

======================================================================

4.2: Convert TITAN output to NetCDF format¶

Convert TITAN binary output to NetCDF format for further analysis.

Run the derecho case NetCDF conversion script:

!$LROSE_DIR/Tstorms2NetCDF -params ./params/titan/Tstorms2NetCDF.derecho -debug -f $DATA_DIR/titan/storms/KingCity/20220521.sh5# Convert derecho case TITAN output to NetCDF

!$LROSE_DIR/Tstorms2NetCDF -params ./params/titan/Tstorms2NetCDF.derecho -f $DATA_DIR/titan/storms/KingCity/20220521.sh5======================================================================

Program 'Tstorms2NetCDF'

Run-time 2025/08/24 16:33:06.

Copyright (c) 1992 - 2025

University Corporation for Atmospheric Research (UCAR)

National Center for Atmospheric Research (NCAR)

Boulder, Colorado, USA.

Redistribution and use in source and binary forms, with

or without modification, are permitted provided that the following

conditions are met:

1) Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

2) Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

3) Neither the name of UCAR, NCAR nor the names of its contributors, if

any, may be used to endorse or promote products derived from this

software without specific prior written permission.

4) If the software is modified to produce derivative works, such modified

software should be clearly marked, so as not to confuse it with the

version available from UCAR.

======================================================================

5. Plot Output¶

We’ll load the necessary Python packages and plot some of the TITAN output now.

# Import Python packages

import pandas as pd

from datetime import datetime

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import matplotlib.dates as mdates

import cartopy.crs as ccrs

import cartopy.feature as cfeature

from shapely.geometry import Polygonfile = "./data/titan/titan/ascii/Tracks2Ascii.derecho.txt"Open text file and adjust columns names in order to import to a pandas dataframe. Since the text file has irregular delimiters, we need to add some extra steps.

#open file and extract column names

f = open(file)

lines = f.readlines()

f.close()

label_line_index = None

for i, line in enumerate(lines):

if 'labels' in line:

label_line_index = i

break

labels = lines[label_line_index].split(":", 1)[1].strip().split(",")#the data lines are the ones that do not start with #

data_lines = [line.strip() for line in lines if not line.startswith("#")]The file last three rows are labeled “parents”,“children”,"nPolySidesPolygonRays72". Parents and children columns refer to identifiers based on merging and splitting processes. The Polygon column shows the values for the lines from the polygon centroid to each vertex, in km. There are 72 values because each line is separated 5 deg (725 =360). With that information and the “envelope_centroid” column, we can retrieve the cells envelopes at each timestep.

rows = []

for line in data_lines:

parts = line.split()

try:

# Try parsing the polygon count value (always right before 72 values)

poly_count_index = -73 # 72 floats + 1 count (the column starts with the numnber 72, which is not part of the values)

# Parents and children may be missing

parent_str = parts[poly_count_index - 2]

child_str = parts[poly_count_index - 1]

# Handle missing values marked as "-"

parents = int(parent_str) if parent_str != '-' else np.nan

children = int(child_str) if child_str != '-' else np.nan

# Polygon values: skip the count, get the next 72 values

polygon_values = list(map(float, parts[poly_count_index + 1:]))

# Fixed columns

fixed_cols = parts[:poly_count_index - 2]

# Combine into one row

row = fixed_cols + [parents, children, polygon_values]

rows.append(row)

except Exception as e:

continue# Final columns: fixed + 3 custom ones

final_labels = labels[:len(rows[0]) - 3] + ['parents', 'children', 'nPolySidesPolygonRays']

# Create DataFrame

df = pd.DataFrame(rows, columns=final_labels)

# Convert date and time columns to datetime

df['date_utc'] = pd.to_datetime(

df['Year'].astype(str) + '-' +

df['Month'].astype(str).str.zfill(2) + '-' +

df['Day'].astype(str).str.zfill(2) + ' ' +

df['Hour'].astype(str).str.zfill(2) + ':' +

df['Min'].astype(str).str.zfill(2) + ':' +

df['Sec'].astype(str).str.zfill(2),

format='%Y-%m-%d %H:%M:%S',

utc=True

)

# Print df

print(df) NSimpleTracks ComplexNum SimpleNum Year Month Day Hour Min Sec \

0 30 0 0 2022 05 21 14 59 49

1 30 0 1 2022 05 21 14 59 49

2 30 0 1 2022 05 21 15 05 50

3 30 0 2 2022 05 21 14 59 49

4 30 0 3 2022 05 21 14 59 49

.. ... ... ... ... ... .. ... .. ..

69 1 47 47 2022 05 21 15 47 48

70 1 47 47 2022 05 21 15 53 53

71 1 55 55 2022 05 21 15 41 49

72 1 55 55 2022 05 21 15 47 48

73 1 55 55 2022 05 21 15 53 53

dBZThreshold ... HailFOKRCat0-4 HailWaldvogelProb HailMassAloft(ktons) \

0 35 ... 4 1 134.097

1 35 ... 0 0 0

2 35 ... 3 0.8 0.939272

3 35 ... 0 0 0

4 35 ... 2 0.6 0.379067

.. ... ... ... ... ...

69 35 ... 2 0 0

70 35 ... 0 0 0

71 35 ... 0 0 0

72 35 ... 0 0 0

73 35 ... 0 0 0

HailVihm(kg/m2) ReflCentroidRange(km) ReflCentroidAzimuth(deg) parents \

0 4.80436 220.577 -114.922 NaN

1 0 236.347 -125.801 NaN

2 0.77276 228.368 -125.88 NaN

3 0 227.531 -123.188 NaN

4 0.481507 207.533 -118.211 NaN

.. ... ... ... ...

69 0.138608 187.84 -145.078 NaN

70 0 183.49 -146.145 NaN

71 0 207.124 -115.29 NaN

72 0 201.847 -116.488 NaN

73 0 188.001 -115.214 NaN

children nPolySidesPolygonRays \

0 5.0 [50.6, 58.8, 52.3, 53.4, 51.9, 44.7, 29.5, 25....

1 12.0 [3.0, 3.0, 3.0, 3.1, 3.2, 3.3, 3.5, 3.0, 2.7, ...

2 12.0 [4.6, 4.6, 4.7, 4.8, 4.9, 5.1, 4.4, 3.8, 3.4, ...

3 5.0 [2.7, 2.7, 2.7, 2.2, 1.8, 1.8, 1.9, 2.0, 2.2, ...

4 5.0 [4.6, 4.6, 4.6, 4.7, 4.9, 4.7, 4.1, 8.0, 7.8, ...

.. ... ...

69 NaN [3.9, 4.0, 4.0, 4.1, 4.2, 3.6, 3.1, 2.7, 2.4, ...

70 NaN [5.5, 5.5, 4.6, 4.7, 4.8, 4.1, 3.4, 3.0, 2.7, ...

71 NaN [2.6, 2.6, 2.6, 3.7, 3.8, 3.9, 3.9, 3.4, 3.4, ...

72 NaN [2.7, 12.8, 16.0, 18.4, 17.8, 17.4, 16.5, 16.1...

73 NaN [10.6, 12.7, 14.8, 16.2, 15.5, 17.2, 19.5, 18....

date_utc

0 2022-05-21 14:59:49+00:00

1 2022-05-21 14:59:49+00:00

2 2022-05-21 15:05:50+00:00

3 2022-05-21 14:59:49+00:00

4 2022-05-21 14:59:49+00:00

.. ...

69 2022-05-21 15:47:48+00:00

70 2022-05-21 15:53:53+00:00

71 2022-05-21 15:41:49+00:00

72 2022-05-21 15:47:48+00:00

73 2022-05-21 15:53:53+00:00

[74 rows x 80 columns]

Let’s explore the TITAN output now!

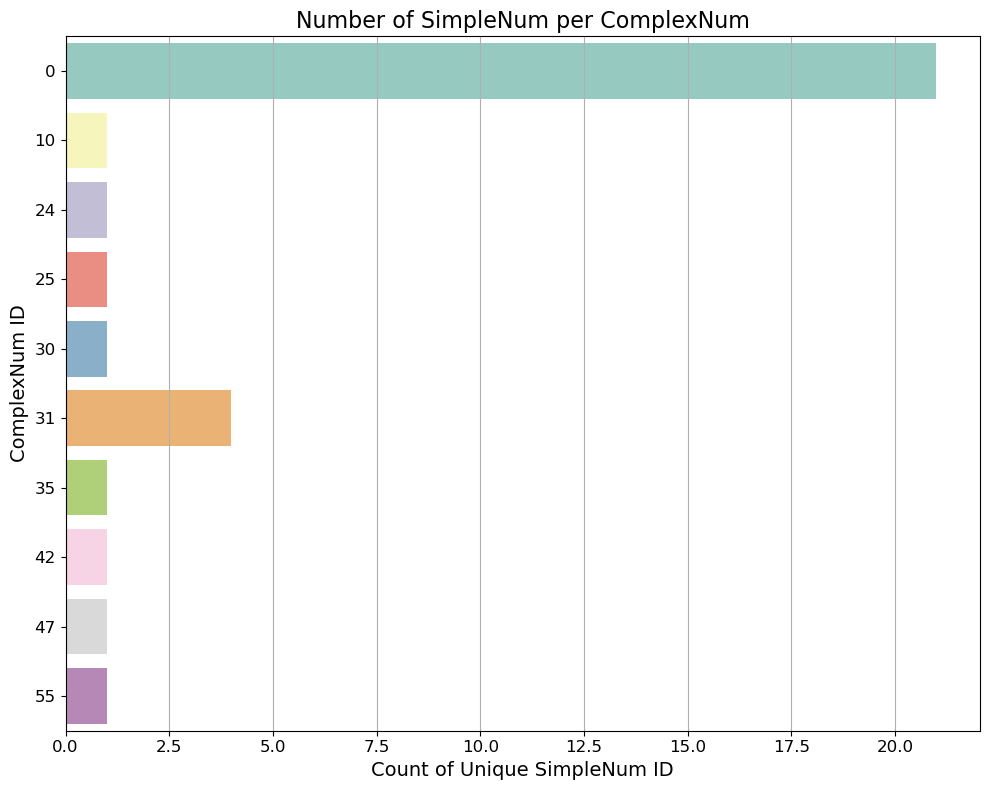

How does TITAN work?¶

TITAN identifies individual radar cells within every radar volume , based on reflectivity and volume thresholds. Then, it tracks them through time using a combination and optimization scheme, and geometric logic to address splitting and merging storms. In this example, we have set the minimum reflectivity threshold to 35 dBZ. This is shown in column ‘dBZThreshold’. That threshold defines the minimum reflectivity for our ‘cell’ entity.

What is the TITAN output?¶

TITAN outputs cell fetures at each tracking timestep, and identifies cells within major systems, based on their interaction with neighboring cells. Therefore, each cell will have a simple and a complex identifier (“SimpleNum” and “ComplexNum”) in the TITAN output text file. For example, if we are tracking a multicell system, all the individual cells tracked within the major system will have different “SimplNum” identifiers, however, they will al have the same “ComplexNum” identifier (the main multicell system).

Let’s inspect our derecho case now! How many Complexes we can identify? Which one contains more tracks (e.g., single cell tracks, and split/merge processes)?

# Count number of unique SimpleNum per ComplexNum

simple_counts = df.groupby('ComplexNum')['SimpleNum'].nunique().reset_index(name='NumSimple')

# Sort (optional, for better visuals)

#simple_counts = simple_counts.sort_values(by='NumSimple', ascending=False)

# Plot 1

plt.figure(figsize=(10, 8))

sns.barplot(y='ComplexNum', x='NumSimple', data=simple_counts, palette='Set3')

plt.title('Number of SimpleNum per ComplexNum', fontsize=16)

plt.xlabel('Count of Unique SimpleNum ID', fontsize=14)

plt.ylabel('ComplexNum ID', fontsize=14)

plt.xticks(fontsize=12)

plt.yticks(fontsize=12)

plt.tight_layout()

plt.grid(axis='x')

plt.show()

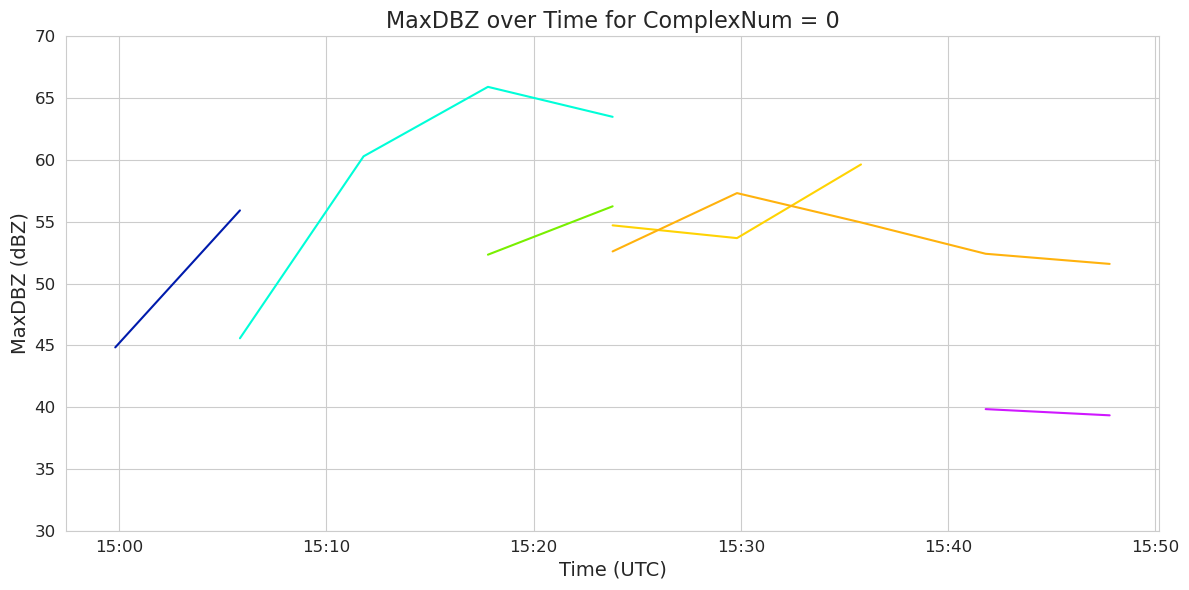

# Filter dataframe for ComplexNum == 0 and sort by time

df0 = df[df['ComplexNum'] == "0"].copy()

df0 = df0.sort_values('date_utc')

df0['MaxDBZ(dBZ)'] = pd.to_numeric(df0['MaxDBZ(dBZ)'], errors='coerce')

df0['date_utc'] = pd.to_datetime(df0['date_utc'], errors='coerce', utc=True)

# Plot

y_min = 30

y_max = 70

y_ticks = np.arange(y_min, y_max + 1, 5)

plt.figure(figsize=(12, 6))

sns.set_style("whitegrid")

sns.lineplot(data=df0, x='date_utc', y='MaxDBZ(dBZ)', hue='SimpleNum', palette='gist_ncar')

plt.ylim(y_min, y_max)

plt.yticks(y_ticks,fontsize=12)

plt.gca().xaxis.set_major_formatter(mdates.DateFormatter('%H:%M'))

plt.xticks(fontsize=12)

plt.title('MaxDBZ over Time for ComplexNum = 0', fontsize=16)

plt.xlabel('Time (UTC)', fontsize=14)

plt.ylabel('MaxDBZ (dBZ)',fontsize=14)

# Remove legend

plt.legend([], [], frameon=False)

plt.tight_layout()

plt.show()

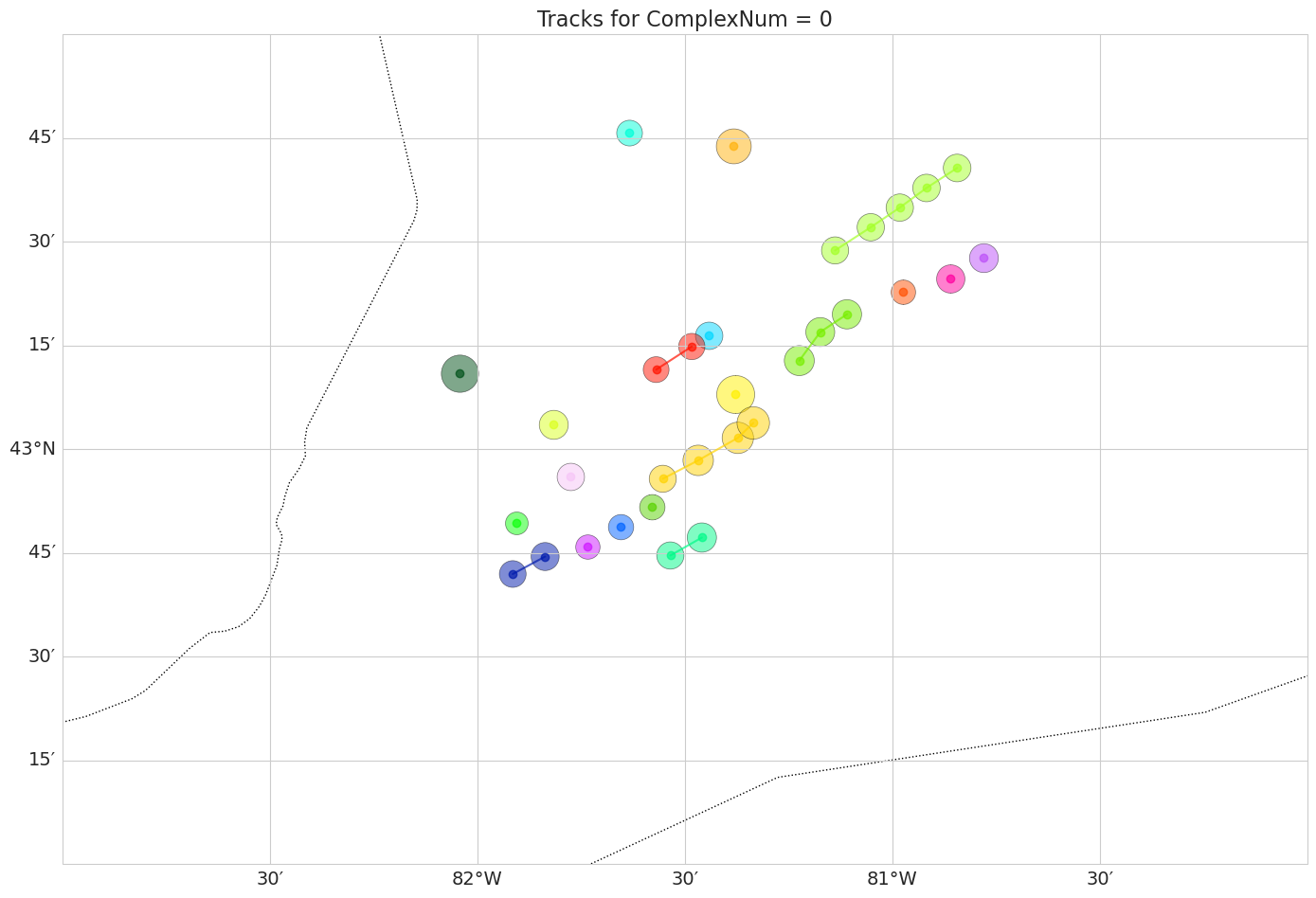

Now we can also plot the centroids of each tracked cell, in a Cartopy map, and add circles around the centroid based on how big the cell volume is in each timestep. We will also show the different cells (‘SimpleNum’) in different colors.

df0 = df0.sort_values(['SimpleNum', 'date_utc'])

# Convert lat/lon columns to numeric, coercing errors to NaN

df0['VolCentroidLat(deg)'] = pd.to_numeric(df0['VolCentroidLat(deg)'], errors='coerce')

df0['VolCentroidLon(deg)'] = pd.to_numeric(df0['VolCentroidLon(deg)'], errors='coerce')

# Set up map plot

plt.figure(figsize=(14, 10))

ax = plt.axes(projection=ccrs.PlateCarree())

ax.set_extent([-83, -80, 42, 44], crs=ccrs.PlateCarree())

ax.coastlines(resolution='10m')

ax.add_feature(cfeature.BORDERS, linestyle=':')

gl = ax.gridlines(draw_labels=True, dms=True, x_inline=False, y_inline=False)

gl.top_labels = False

gl.right_labels = False

gl.xlabel_style = {'size': 14}

gl.ylabel_style = {'size': 14}

# Unique SimpleNum and colors

simple_nums = df0['SimpleNum'].unique()

palette = sns.color_palette("gist_ncar", n_colors=len(simple_nums))

df0['Volume(km3)'] = pd.to_numeric(df0['Volume(km3)'], errors='coerce').fillna(0)

# We normalize the Volume for the marker sizes

for i, simple_num in enumerate(simple_nums):

track = df0[df0['SimpleNum'] == simple_num].copy()

lat = track['VolCentroidLat(deg)']

lon = track['VolCentroidLon(deg)']

vol = pd.to_numeric(track['Volume(km3)'], errors='coerce').fillna(0)

# Scale volume: use log scale to compress range + clip to reasonable range

sizes = np.log10(vol + 1) * 200 # +1 to avoid log(0)

#sizes = sizes.clip(10, 300)

ax.plot(lon, lat, marker='o', linestyle='-', color=palette[i], alpha=0.7, transform=ccrs.PlateCarree())

ax.scatter(lon, lat, s=sizes, color=palette[i], alpha=0.5, transform=ccrs.PlateCarree(), edgecolor='k', linewidth=0.5)

#plt.legend(title='SimpleNum', bbox_to_anchor=(1.05, 1), loc='upper left')

plt.yticks(fontsize=12)

plt.xticks(fontsize=12)

plt.title('Tracks for ComplexNum = 0', fontsize= 16)

plt.tight_layout()

plt.show()

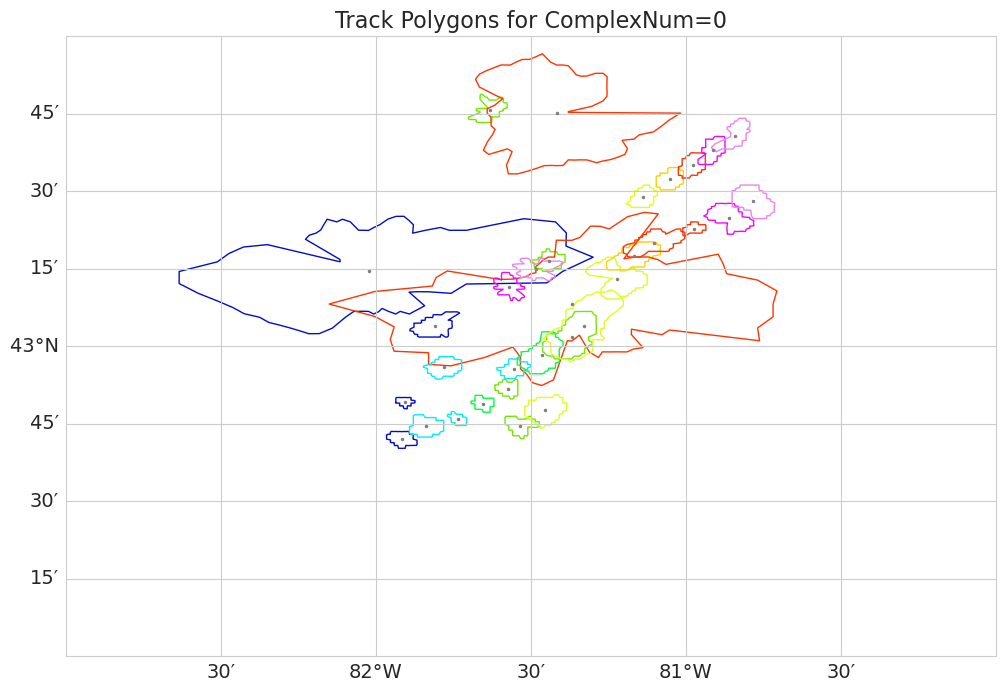

fig, ax = plt.subplots(figsize=(12, 12),

subplot_kw={'projection': ccrs.PlateCarree()})

# Set the map extent (lon_min, lon_max, lat_min, lat_max)

ax.set_extent([-83, -80, 42, 44], crs=ccrs.PlateCarree())

ax.coastlines()

#color by time

timesteps = df0['date_utc'].unique()

palette = sns.color_palette("gist_ncar", n_colors=len(timesteps))

gl = ax.gridlines(draw_labels=True, dms=True, x_inline=False, y_inline=False)

gl.top_labels = False

gl.right_labels = False

gl.xlabel_style = {'size': 14}

gl.ylabel_style = {'size': 14}

for idx, row in df0.iterrows():

lat_centroid = float(row['EnvelopeCentroidLat(deg)'])

lon_centroid = float(row['EnvelopeCentroidLon(deg)'])

rays = row['nPolySidesPolygonRays']

if not rays or len(rays) == 0:

continue

angles = np.deg2rad(np.arange(0, 360, 5)) # 72 vertices at every 5 degrees

rays = np.array(rays, dtype=float) #from centroid to vertex

# Rays in km to degrees

ray_x = rays * np.cos(angles)

ray_y = rays * np.sin(angles)

# Approximate conversion from km to degrees lat/lon

lat_vertices = lat_centroid + ray_y / 111

lon_vertices = lon_centroid + ray_x / (111 * np.cos(np.deg2rad(lat_centroid)))

polygon_points = list(zip(lon_vertices, lat_vertices))

poly = Polygon(polygon_points)

time_idx = np.where(timesteps == row['date_utc'])[0][0]

ax.add_geometries([poly], crs=ccrs.PlateCarree(),

edgecolor=palette[time_idx], facecolor='none', linewidth=1)

ax.plot(lon_centroid, lat_centroid, marker='o',color='grey', markersize=1.5, transform=ccrs.PlateCarree())

plt.title("Track Polygons for ComplexNum=0", fontsize=16)

plt.show()

Afternoon Project¶

To run TITAN on the hail case, the previous commands just need to be recreated using the hail parameter files. We’ve summarized the commands below.

Convert the data.

! LROSE_DIR/RadxConvert -sort_rays_by_time -const_ngates -params ./params/titan/RadxConvert.qc.hail -debug -f {DATA_DIR}/radar/raw/hail/202408060*h5Prepare the sounding data.

! LROSE_DIR/Mdv2SoundingSpdb -debug -params ./params/titan/Mdv2SoundingSpdb.ERA5.hail -f DATA_DIR/ERA5/20240806/20240806_0*Run the PID.

!$LROSE_DIR/RadxPid -params ./params/titan/RadxPid.hail -debugGrid the data on a Cartesian grid.

!$LROSE_DIR/Radx2Grid -params ./params/titan/Radx2Grid.hail -debugRun TITAN.

!$LROSE_DIR/Titan -params ./params/titan/Titan.hail -start “2024 08 06 00 00 00” -end “2024 08 06 06 00 00” -debugConvert the tracks to an ASCII file.

! LROSE_DIR/Tracks2Ascii -params ./params/titan/Tracks2Ascii.hail -f ~/data/ams2025/titan/storms/Strathmore/20240806.th5 > DATA_DIR/titan/ascii/Tracks2Ascii.hail.txtConvert the output to NetCDF.

! LROSE_DIR/Tstorms2NetCDF -params ./params/titan/Tstorms2NetCDF.hail -debug -f DATA_DIR/titan/storms/Strathmore/20240806.sh5

Play with the reflectivity or minimum area thresholds¶

If you’re curious how the parameter choices affect the analysis, feel free to play around with different values of the reflectivity and minimum area thresholds.

These are in the ./params/titan/Titan.hail and ./params/titan/Titan.derecho parameter files, which you can edit directly in JupyterLab.

- Dixon, M., & Wiener, G. (1993). TITAN: Thunderstorm Identification, Tracking, Analysis, and Nowcasting—A Radar-based Methodology. Journal of Atmospheric and Oceanic Technology, 10(6), 785–797. https://doi.org/10.1175/1520-0426(1993)010<;0785:ttitaa>2.0.co;2